DifferSketching: How Differently Do People Sketch 3D Objects?

Chufeng Xiao

1 School of Creative Media, City University of Hong Kong

2 Department of Computer Science, City University of Hong Kong

3 Wangxuan Institute of Computer Technology, Peking University

4 SketchX, CVSSP, University of Surrey

* Joint first authors

Accepted by SIGGRAPH Asia 2022 (Journal Track)

[Paper] [Dataset] [Code] [Supplemental Material]

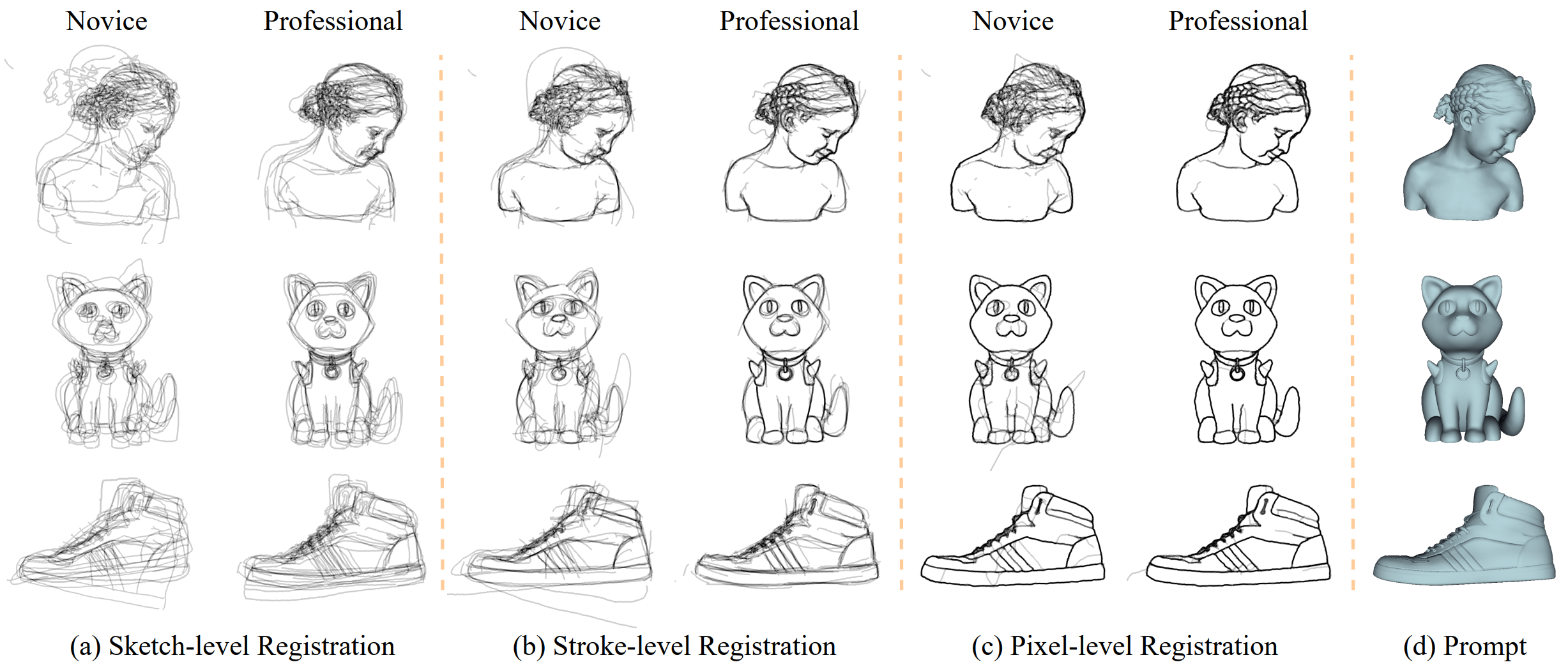

Fig 1: We present DifferSketching, a new dataset of freehand sketches to understand how differently professional and novice users sketch 3D objects (d). We perform three-level data analysis through (a) sketch-level registration, (b) stroke-level registration, and (c) pixel-level registration, to understand the differences between the drawings of the two skill groups.

Abstract

Multiple sketch datasets have been proposed to understand how people draw 3D objects. However, such datasets are often of small scale and cover a small set of objects or categories. In addition, these datasets contain freehand sketches mostly from expert users, making it difficult to compare the drawings by expert and novice users, while such comparisons are critical in informing more effective sketch-based interfaces for either user group. These observations motivate us to analyze how differently people with and without adequate drawing skills sketch 3D objects. We invited 70 novice users and 38 expert users to sketch 136 3D objects, which were presented as 362 images rendered from multiple views. This leads to a new dataset of 3,620 freehand multi-view sketches, which are registered with their corresponding 3D objects under certain views. Our dataset is an order of magnitude larger than the existing datasets. We analyze the collected data at three levels, i.e., sketch-level, stroke-level, and pixel-level, under both spatial and temporal characteristics, and within and across groups of creators. We found that the drawings by professionals and novices show significant differences at the stroke level, both intrinsically and extrinsically. We demonstrate the usefulness of our dataset in two applications: (i) freehand-style sketch synthesis, and (ii) posing it as a potential benchmark for sketch-based 3D reconstruction.

Dataset

Our dataset is now available on Google Drive. The file folder tree is as follows:

    [Category]
    - {obj}: 3D models
    - {mv-img}: Multi-view reference images rendered from 3D models
    - {tracing}: The tracings over the reference images
    - [Type]_png: The drawings rasterized from .json files
    - [Type]_json: The sequence data includes pressure, timeslot, drawing path, etc.

* [Category] indicates the category name, e.g. {Animal_Head}.
* [Type] indicates the {original} type (user drawings) or one of the registered types derived from the user drawings ({global} -> sketch-level, {stroke} -> stroke-level, and {reg} -> pixel-level).

If you download and use the dataset, you are deemed to agree to the license policy:

- The dataset is available for non-commercial research purposes only.

- All the 3D models of the dataset are obtained from the Internet and are not our property. We are not responsible for the content or the meaning of these models.

- All the multi-view images of the dataset are rendered from the 3D models.

- All sketches of the dataset are either drawn or traced freehand by users we hired, or produced from the user drawings by our registration method.

- You agree not to reproduce, duplicate, copy, sell, trade, resell or exploit for any commercial purposes, any portion of the 3D models, images and sketches as well as any portion of derived data.

- We reserve the right to terminate your access to the DifferSketching dataset at any time.

We will release more data and code in the future. Please contact us via email (hongbofu@cityu.edu.hk) if you have any questions about the project.

* Last update on 23 September 2022.
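As a rough illustration of the folder layout described in this section, the following Python sketch builds the expected sub-folder paths for one category and iterates over its sequence files. It rests on several assumptions that are not confirmed here: that the braces and brackets in the folder tree are placeholder notation rather than literal characters, that the sequence files carry a `.json` extension, and that the dataset has been unpacked to a local root folder. The function names `category_folders` and `load_sequences` are hypothetical helpers, not part of any released code. Because the JSON schema is not documented on this page, the parsed data is returned untouched.

```python
import json
from pathlib import Path

# Folder names taken from the tree in this section. Treating the braces and
# brackets as placeholder notation (not literal characters) is an assumption.
FIXED_FOLDERS = ("obj", "mv-img", "tracing")


def category_folders(root, category, sketch_type="original"):
    """Return the expected sub-folders of one category as a dict of Paths."""
    base = Path(root) / category
    folders = {name: base / name for name in FIXED_FOLDERS}
    folders["png"] = base / f"{sketch_type}_png"
    folders["json"] = base / f"{sketch_type}_json"
    return folders


def load_sequences(root, category, sketch_type="original"):
    """Yield (file name, parsed JSON) for every .json file of one type.

    The JSON schema is not documented here, so the parsed object is
    handed back unchanged for the caller to inspect.
    """
    json_dir = category_folders(root, category, sketch_type)["json"]
    for path in sorted(json_dir.glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            yield path.name, json.load(handle)
```

For example, `load_sequences("DifferSketching", "Animal_Head")` would walk the `original_json` folder of the `Animal_Head` category, assuming the dataset was unpacked into a local folder named `DifferSketching` (a hypothetical location). Passing `sketch_type="stroke"` or `sketch_type="reg"` would select the corresponding registered drawings instead.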

Citation

    @article{xiao2022differsketching,
      title={DifferSketching: How Differently Do People Sketch 3D Objects?},
      author={Xiao, Chufeng and Su, Wanchao and Liao, Jing and Lian, Zhouhui and Song, Yi-Zhe and Fu, Hongbo},
      journal={ACM Transactions on Graphics (Proceedings of ACM SIGGRAPH Asia 2022)},
      volume={41},
      number={4},
      pages={1--16},
      year={2022},
      publisher={ACM New York, NY, USA}
    }